What would happen if you could put a trillion sensors all over planet Earth, monitoring everything from weather patterns to highway traffic? Imagine being able to track energy use at an incredibly fine scale, or to detect earthquakes early enough to save lives. Hewlett-Packard has been working on a system like this for several years, one that would deploy sensors about the size of a matchbook, each with enough computing power and radio range to report its findings. Individually they’re not very powerful; their strength lies in their sheer numbers.

The idea goes back to what was once dubbed "smart dust": a cloud of sensors so tiny that, to the naked eye, they would be little more than dust motes. Rather than bringing macro-scale computing to bear on a situation in order to monitor it, you would scatter smart dust in profuse quantities. You can imagine this technology being used for security, not to mention surveillance, a scary thought in the era of Edward Snowden. Once introduced into society, would smart dust’s dangerous uses outweigh its advantages?

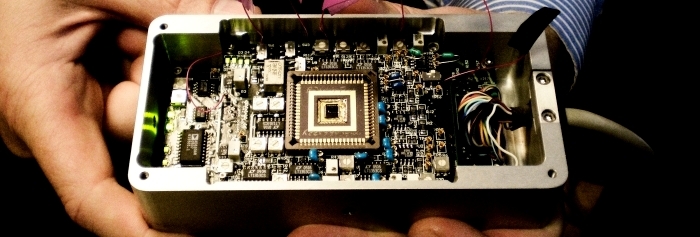

It’s early days in the "smart dust" era, but a trend is emerging. HP is working with Royal Dutch Shell to deploy an inertial sensing technology built around the same kind of accelerometer you find in a smartphone, though a thousand times more sensitive. A million of these sensors would be put to work in oil exploration, measuring the movement and vibration of rock over an area of six square miles. Testbed deployments like this one begin to move tiny sensor networks out of the laboratory and into real-world situations.

HP has already described future sensing nodes as small as a pushpin, which could be attached to buildings or bridges to pick up signs of dangerous structural strain. Because such networks could be reconfigured in software, they might also be tuned to monitor life-support equipment in hospitals or to detect dangerous chemicals in the food supply. One day a smart dust network’s combined computing power might even let it recognize the person using it, allowing unique interaction between man, machine and the environment.

Sensors that mimic the senses of taste and smell yet are small enough to clip onto a mobile phone are under active investigation (imagine waving your phone over produce to check its freshness), and even smaller gadgets are doubtless on the way. At the University of Michigan, researchers are studying computers the size of snowflakes, with prototypes a single cubic centimeter in size. Dubbed "Michigan Micro Motes," these rapidly shrinking devices collect data through customized sensors and report the results by radio. A key issue is power: how do you keep a network of thousands, if not millions, of snowflake-sized sensors running?

If the Michigan team can get its Micro Motes working and as small as it ultimately wants to make them, there’s talk of powering them with solar energy, perhaps through embedded solar panels. Another possibility is running them off heat energy harvested from temperature differences. But what I wonder about is what to do with all that data. We already know from huge projects in physics and biology that the problem isn’t producing data but making sense of it. HP says a million sensors running 24 hours a day would capture 20 petabytes of data in six months. That’s 20 million gigabytes that have to be not only collected but thoroughly analyzed.
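To get a feel for what HP’s figure implies, here is a back-of-the-envelope sketch in Python. It assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes), treats "six months" as half a year (182.5 days), and assumes every sensor produces data at the same steady rate; the function name `data_budget` is purely illustrative.

```python
# Back-of-the-envelope breakdown of HP's projection (1 million sensors,
# 20 PB in six months). Assumptions: decimal SI units, six months taken
# as 182.5 days, and a uniform, constant data rate across all sensors.

def data_budget(sensors: int, total_bytes: float, days: float) -> dict:
    """Split a total data volume into per-sensor and per-second averages."""
    seconds = days * 24 * 60 * 60  # sensors run around the clock
    return {
        "total_gb": total_bytes / 10**9,
        "per_sensor_gb": total_bytes / sensors / 10**9,
        "per_sensor_bytes_per_s": total_bytes / sensors / seconds,
        "network_gbit_per_s": total_bytes * 8 / seconds / 10**9,
    }


budget = data_budget(sensors=1_000_000, total_bytes=20 * 10**15, days=365 / 2)
print(f"Total:         {budget['total_gb']:,.0f} GB")
print(f"Per sensor:    {budget['per_sensor_gb']:.0f} GB over six months")
print(f"Per sensor:    about {budget['per_sensor_bytes_per_s']:,.0f} bytes/s")
print(f"Whole network: about {budget['network_gbit_per_s']:.1f} Gbit/s")
```

Under these assumptions each sensor trickles out only about 1.3 KB per second (roughly 20 GB over six months), yet the network as a whole produces a sustained stream of around 10 gigabits per second, every second, for the full six months. That steady aggregate flow, rather than any single sensor, is what the analysis side has to keep up with.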

So let’s say we reach the point where we can monitor the surface of the planet to keep people safer and to understand large-scale issues like climate change and the health of our oceans. At the same time, tiny sensors turn our smartphones into interactive tools that not only recognize us but perform essential tasks, like checking our surroundings for germs or recognizing faces and whispering their names into our ears. That science-fictional outcome will be backed by vast computing resources in the cloud that can make sense of all this data.

Even more bizarre is what researchers at UC Berkeley are pondering: a "neural dust" consisting of implantable, dust-sized wireless sensors that would enable brain-machine interfaces. Researchers would be able to track brain function at a level of detail that staggers the imagination, while people living with paralysis or other conditions might regain some motor control, even if it’s mediated by an external robotic arm or wheelchair.

We’re a long way from a widely available consumer product that lets us control a computer by thought alone; the first challenges are designing and building neural dust particles this small (on a scale of about 100 micrometers) and learning how to implant them in the human cortex. Still, the long-term payoff of ultra-miniaturization could be enormous. Time will tell which of these efforts bears fruit, but the larger point is that we are moving toward what many call an "Internet of Things," one that will push computing power into the objects around us.